Last year I worked on a team migrating a large application from ASP.NET MVC 5 to ASP.NET Core. Among our goals, we wanted to make the site use a responsive layout, “future-proof” the technology stack, and clean up a bunch of legacy cruft. Our initial launch did not go smoothly and we reverted to the previous site to make changes. In the process we learned some “gotchas”. Today I’m going to discuss one of those and how we addressed it. We’ll learn about throttling requests in .NET Core web applications.

Code for this post can be located on my GitHub.

Understanding the (our) problem

ASP.NET Core is a complete rewrite of the platform from the ground up. The basic premise here was that ASP.NET, as a platform, grew fat and interdependent on its features. The team rebuilt Core in a way that you could use the features you want by way of middleware. Additionally, Core added its own lightweight server you can use: Kestrel. Microsoft suggests that for most applications you also put a full-featured server in front of Kestrel as a reverse proxy.

One difference we learned about is how IIS handles requests. When you use full-framework ASP.NET MVC with IIS, it queues and handles your requests “properly” with the default settings. As of IIS 7 and “integrated mode”, IIS no longer uses a queue setting on the application pool. Instead, there are deeper settings in the ASP.NET configuration chain that handle them.

I’ll be the first to admit that I was lax on learning said configuration options to begin with. So after spinning up our ASP.NET Core application behind IIS as a reverse proxy, things quickly got out of hand. I was completely unaware of how to approach it. It soon became apparent that our problem was due to IIS not throttling or queueing our requests appropriately. I just didn’t know how to correct it.

ApplicationPool

An answer on StackOverflow suggests that you can modify the applicationPool for your particular application (on IIS 7+). Allegedly that should apply to your ASP.NET Core application via the reverse proxy. In my particular use-case I have several Windows Server 2008 R2 servers for test instances. I attempted putting those settings in the individual web.config and the application failed to launch. Unfortunately I cannot apply it at a higher level (machine-level config) because there are applications I don’t want it to affect. Furthermore, IntelliSense for the web.config in Visual Studio Pro 2017 on Windows 10 tells me that setting is invalid.

There was an overall “urgency” needed to fix our problem. The seemingly conflicting and/or incomplete information led us to the solution we implemented. Now that things have simmered down I do plan on taking a deeper look at all this. Who knows, maybe I’ll find out that we don’t need today’s post after all. I’d be ok with that.

The major takeaway here, however, is that you cannot come into ASP.NET Core development with any preconceived notions or expectations. They probably don’t apply. We made assumptions about how things would work based on experience in traditional ASP.NET MVC. That didn’t pan out.

If you are interested in learning more about our path then read up on the “History” section after the “Conclusion”. It’s thick.

Setting it up

I’m not going to pretend I was the one that came up with this solution. While I could have come up with this solution I was too stressed out. Luckily Tomasz Pęczek came up with it first. His linked GitHub repository has two versions for handling locking; we decided to go with the SemaphoreSlim version. The premise of his educational project was exactly about throttling requests in .NET Core web applications.
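If you haven’t seen the pattern before, here is a rough sketch of the general idea behind semaphore-based throttling: a fixed number of slots, a bounded waiting line, and a time limit on waiting. This is not Tomasz’s code. The class name `SimpleThrottle`, its constructor parameters, and `TryRunAsync` are all made up for illustration. Also note that `SemaphoreSlim` does not promise strict first-in, first-out ordering for waiters, so treat this as a toy model rather than a drop-in replacement.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical illustration only; not the code from Tomasz's repository.
public sealed class SimpleThrottle
{
    private readonly SemaphoreSlim _slots;      // how many requests may run at once
    private readonly int _maxQueueLength;       // how many requests may wait for a slot
    private readonly TimeSpan _maxTimeInQueue;  // how long a request may wait
    private int _waiting;                       // current number of waiting requests

    public SimpleThrottle(int limit, int maxQueueLength, TimeSpan maxTimeInQueue)
    {
        _slots = new SemaphoreSlim(limit, limit);
        _maxQueueLength = maxQueueLength;
        _maxTimeInQueue = maxTimeInQueue;
    }

    // Returns true if the work ran, false if it was rejected (queue full or wait timed out).
    public async Task<bool> TryRunAsync(Func<Task> work,
        CancellationToken cancellationToken = default(CancellationToken))
    {
        // Fast path: grab a free slot without waiting.
        if (!_slots.Wait(0))
        {
            // No free slot: join the waiting line unless it is already full.
            if (Interlocked.Increment(ref _waiting) > _maxQueueLength)
            {
                Interlocked.Decrement(ref _waiting);
                return false;
            }

            try
            {
                // Wait for a slot, but give up after the configured time.
                if (!await _slots.WaitAsync(_maxTimeInQueue, cancellationToken))
                {
                    return false;
                }
            }
            finally
            {
                Interlocked.Decrement(ref _waiting);
            }
        }

        try
        {
            await work();
            return true;
        }
        finally
        {
            // Always give the slot back, even if the work throws.
            _slots.Release();
        }
    }
}
```

A caller that gets `false` back would answer with a 503, which is the status the real middleware returns when the limit is exceeded.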

Tomasz has an entire blog post written about his implementation, so I’m only going to focus on the changes I added. Let’s start with the MaxConcurrentRequestsOptions.cs class.

MaxConcurrentRequestsOptions

I added the following properties:

public bool Enabled { get; set; } = true;

public string[] ExcludePaths { get; set; }It is nice to be able to turn the queuing/throttling on and off easily per a configuration option. Frequently you’ll also want to be able to exclude certain paths. A sample of the config on one of the test servers had the following JSON values:

"MaxConcurrentRequests": {

"Enabled": true,

"Limit": 50,

"MaxQueueLength": 75,

"MaxTimeInQueue": 15000,

"LimitExceededPolicy": "FifoQueueDropTail",

"ExcludePaths": [

"/error",

"/css",

"/js",

"/dist"

]

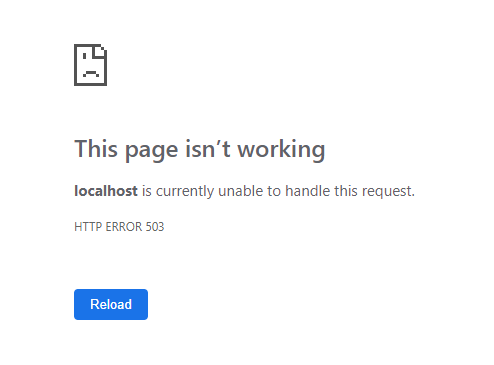

}The above config excludes the error controller path. It also excludes compiled/dynamic CSS and JS and lastly our “dist” folder. Except for the error handler, these are all things I don’t want throttled since they cause a partial page load. Oops! In the case of the error handler I actually wanted a “friendly” error to show up for the 503 error. 503, btw, is the error we’re throwing from the throttler when it gets too busy. Please don’t confuse this with the 502.3 which happens when our “proxy” fails to respond (such as Kestrel to IIS).
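To make the shape of that configuration concrete, here is a minimal sketch of an options class those JSON keys could bind to. It is a guess based on the key names, not a copy of the real class: the property types, the defaults (borrowed from the sample values above), the enum name `ThrottlePolicy`, and every enum member other than `FifoQueueDropTail` are assumptions.

```csharp
using System;

// Hypothetical enum; only FifoQueueDropTail appears in the sample config.
public enum ThrottlePolicy
{
    Drop,               // assumed: reject immediately once the limit is hit
    FifoQueueDropTail,  // queue requests; when the queue is full, reject the newest
    FifoQueueDropHead   // assumed: when the queue is full, reject the oldest
}

// Hypothetical reconstruction; property names mirror the JSON keys.
public class MaxConcurrentRequestsOptions
{
    // Master switch so throttling can be turned off from configuration.
    public bool Enabled { get; set; } = true;

    // Maximum number of requests processed at the same time.
    public int Limit { get; set; } = 50;

    // Maximum number of requests allowed to wait for a free slot.
    public int MaxQueueLength { get; set; } = 75;

    // Maximum time a request may wait in the queue, in milliseconds.
    public int MaxTimeInQueue { get; set; } = 15000;

    public ThrottlePolicy LimitExceededPolicy { get; set; } = ThrottlePolicy.FifoQueueDropTail;

    // Path prefixes that bypass throttling. Defaulting to an empty array
    // means a config without "ExcludePaths" never yields a null here.
    public string[] ExcludePaths { get; set; } = Array.Empty<string>();
}
```

Defaulting `ExcludePaths` to an empty array is a deliberate choice in this sketch: it keeps any later `Any(...)` call over the list from blowing up when the key is missing from the config.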

To be honest, error handling with a reverse proxy (IIS) can be a real pain in the butt sometimes. If we write an error response in .NET Core then IIS would consider it handled. IIS, through web.config, has several settings to override this. My attempts to have IIS handle the “unhandled” ones would break the others. I finally opted to allow .NET Core to handle it through-and-through even for the 503.

CAUTION: One thing you need to be careful with is having too many exclusions. If you’re looking at implementing queuing/throttling in the first place then it’s likely because you’re overwhelming the application, right? Denial of service anyone?
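Part of the reason to keep the exclusion list short is that a plain prefix match is broader than it looks. The sketch below uses a hypothetical helper, `ExclusionCheck.IsExcluded`, which is not part of the middleware, to show how a "starts with" check treats a few paths. The case-insensitive comparison is an assumption made for this example.

```csharp
using System;
using System.Linq;

// Hypothetical helper for illustration; not part of the middleware.
public static class ExclusionCheck
{
    // True when requestPath begins with any of the excluded prefixes.
    public static bool IsExcluded(string requestPath, string[] excludePaths)
    {
        if (string.IsNullOrEmpty(requestPath) || excludePaths == null)
        {
            return false;
        }

        return excludePaths.Any(prefix =>
            requestPath.StartsWith(prefix, StringComparison.OrdinalIgnoreCase));
    }
}
```

With the exclusions `/error`, `/css`, `/js`, and `/dist`, this returns true for `/css/site.css` and `/error/503` and false for `/products/42`, as intended. It also returns true for `/distributors/list`, because that path happens to start with `/dist`. Every prefix you add is a wider door around the throttle, so it pays to check what else lives under it.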

MaxConcurrentRequestsMiddleware

Given I added a couple of modifications to the options, it is only natural to assume I had to modify the middleware itself, right? Changes here are actually pretty minimal. My modifications sit at the beginning of the public async Task Invoke(HttpContext context) method, which is included below in full for completeness’ sake.

First, I added the ability to skip the middleware if the request matches any of our exclusion paths. Second, we check to see if the middleware is enabled. Given some changes added in the MaxConcurrentRequestsMiddlewareExtensions class, that second check for enabled will never actually be false. I like to be certain that other devs don’t screw it up later so I check again.

    public async Task Invoke(HttpContext context)
    {
        // Excluded paths (error pages, static assets) bypass the throttle entirely.
        // The null check guards against a config that omits "ExcludePaths".
        if (_options.ExcludePaths != null
            && _options.ExcludePaths.Any(path => context.Request.Path.Value.StartsWith(path)))
        {
            await _next(context);
            return;
        }

        // Respond with a 503 when the limit is exceeded and the request could not be queued.
        if (_options.Enabled && CheckLimitExceeded() && !(await TryWaitInQueueAsync(context.RequestAborted)))
        {
            if (!context.RequestAborted.IsCancellationRequested)
            {
                IHttpResponseFeature responseFeature = context.Features.Get<IHttpResponseFeature>();
                responseFeature.StatusCode = StatusCodes.Status503ServiceUnavailable;
                responseFeature.ReasonPhrase = "Concurrent request limit exceeded.";
            }
        }
        else
        {
            try
            {
                await _next(context);
            }
            finally
            {
                // Runs even if the downstream pipeline throws, so the count stays accurate.
                if (await ShouldDecrementConcurrentRequestsCountAsync())
                {
                    Interlocked.Decrement(ref _concurrentRequestsCount);
                }
            }
        }
    }

MaxConcurrentRequestsMiddlewareExtensions

This brings us to my final set of modifications. These changes resolve the options and, if not enabled, we simply skip adding the middleware. I also added an extension method for setting up the IOptions configuration.

My personal take is that if I’m setting up something for “easy” consumption, I’d rather the end user not have to know specifics about all the configuration and what-not. They should just be able to call into my middleware and let it handle the rest. Without further ado, here is the class in its entirety:

    using System;
    using Microsoft.AspNetCore.Builder;
    using Microsoft.Extensions.Configuration;
    using Microsoft.Extensions.DependencyInjection;

    public static class MaxConcurrentRequestsMiddlewareExtensions
    {
        public static IApplicationBuilder UseMaxConcurrentRequests(this IApplicationBuilder app)
        {
            if (app == null)
            {
                throw new ArgumentNullException(nameof(app));
            }

            var throttlingSettings = app.ApplicationServices.GetService<Microsoft.Extensions.Options.IOptions<MaxConcurrentRequestsOptions>>();

            // If the options are registered and throttling is disabled, leave the pipeline untouched.
            if (!throttlingSettings?.Value.Enabled ?? false)
                return app;

            return app.UseMiddleware<MaxConcurrentRequestsMiddleware>();
        }

        // Binds the "MaxConcurrentRequests" configuration section to the options class.
        public static IServiceCollection ConfigureRequestThrottleServices(this IServiceCollection services, IConfiguration configuration)
        {
            services.Configure<MaxConcurrentRequestsOptions>(configuration.GetSection("MaxConcurrentRequests"));
            return services;
        }
    }

Putting it together

I didn’t feel like the demo application Tomasz set up really helped demonstrate things in an easy to see kind of way. His unit tests did a great job with things but sometimes you just gotta experience it yourself. Instead, I decided to take all of his code (middleware, options, etc) out of the demo application and make a class library for it. This library is called MaxConcurrentRequests.Core. Additionally, I created a new web application to test the throttling on it and called it MaxConcurrentRequests.Demo.

In the demo I left all the default generated web application stuff in place, added a reference to our class library, and then set up the configuration with a limit of 1 and max queue length of 2. I left everything else the same as the sample configuration above (15000 ms queue time, exclude paths of error, css, js, and dist, and FifoQueueDropTail).

For better testing, I also added an action method with view that simulates taking 5s to render. Look, it ain’t perfect and it does a pretty poor job of simulating the behavior we were running into but given enough spamming we manage to trigger the hard lock. To trigger it I just CTRL+Click spam the menu options until some of them start failing per the Chrome message below:

Taking it further

So let’s face it, the default error messages are kinda lame. .NET Core allows you to set up custom error handling for status codes, right? This is exactly the use-case I came up with that initially made me implement the path exclusions for the throttling. It became apparent that I needed to exclude the ErrorController base path (and thus all child paths) from the throttling. Lastly, I set up a view for the 503 error code (among others) in my status code handler.

Conclusion

This is absolutely not the ideal way to handle queuing/throttling in .NET Core but absent any good documentation this is the direction I took. Now that the smoke has cleared I do intend on really digging deep and seeing if I can figure out different/better ways to handle this with IIS. On the surface I am at least aware of a potential setting in NGINX that can handle this.

.NET Core 2.2 was just released, and Scott Hanselman blogged about some of its improvements, particularly with IIS. I fully intend to research that to see if we can make things work without this additional middleware.

Remember that all my code for this post is found on my GitHub.

History Lesson

I promised a history lesson if you cared enough about it so… here you go!

The symptoms

We launched the newly completed website early one morning and monitored the logs for the first two hours. After that point in time I had some conflicts in my schedule (we released on a weekend which is a different story for a different time) and had to go. While I was gone the server came to a standstill and “crashed”. In a panic mode the application was restarted and monitoring once again commenced. It “crashed” again within a couple hours.

First off we needed to understand the symptom. As the site grew busier the requests would start taking longer and longer (naturally, to a degree) but eventually they would get to the point where they would never complete. Subsequent requests during this time would start getting a 502 indicating that the gateway was failing to respond. In this case, that meant Kestrel, sitting behind the IIS reverse proxy, was failing to respond.

Later we would learn that it actually didn’t crash and if we waited long enough (like ten minutes, no joke) with no new requests the application would recover. Obviously that isn’t something that would happen live so we had to fix it.

Initial Findings

Our initial week after the “crashes” led us to a few conclusions that certainly helped the overall outcome but were not individually the solutions. One of those was simply that we were flooding the Kestrel server with requests that used to be handled by IIS: static content. I ended up following advice by Rick Strahl and had IIS intercept all those rather than pass them through Kestrel. Initial results were promising so we tried another deploy. And rolled it back.

We decided that we still had a lot of dynamic images being served up through an API on the site and maybe that was part of the problem. I spun up a CDN application whose sole purpose was to serve up (and cache) those images.

Additionally we came across several posts on the internet with similar issues as us that suggested we needed to use async/await as much as possible with ASP.NET Core. We followed every API call and controller method we had, traced to the root service calls, and then worked our way back out.

We deployed a third time. And rolled it back.

K6 Load Testing

Eventually we became acquainted with a load testing tool named K6. Using this tool we were able to actually reproduce the *real* issue on our test servers and in development. This led us to some code that was hammering a service for data and the busier it got the longer it took. Duh. We ended up implementing some heavy caching on that data since it didn’t change often. It turned out this was our panacea so to speak. K6 finally got us to the point we could deploy successfully with some caveats.

With K6 we identified our top load the site could handle before degrading to a point of unusability. Luckily that number was higher than the old site was handling (something we learned by also using K6 to test it). We made use of the Kestrel limits MaxConcurrentConnections and MaxConcurrentUpgradedConnections (see below) to cut requests off at the knees.

    .UseKestrel(config =>
    {
        config.AddServerHeader = false;

        // Convert.Standard is a helper outside the BCL that parses a string into a nullable number.
        // A null limit leaves that Kestrel limit unlimited.
        config.Limits.MaxConcurrentConnections = Convert.Standard.StringToNullableInt64(System.Configuration.ConfigurationManager.AppSettings["MaxConcurrentConnections"]);
        config.Limits.MaxConcurrentUpgradedConnections = Convert.Standard.StringToNullableInt64(System.Configuration.ConfigurationManager.AppSettings["MaxConcurrentUpgradedConnections"]);

        // Only override the keep-alive timeout when a value is configured (in minutes).
        var keepAlive = Convert.Standard.StringToNullableInt(System.Configuration.ConfigurationManager.AppSettings["KeepAliveTimeout"]);
        if (keepAlive.HasValue)
            config.Limits.KeepAliveTimeout = TimeSpan.FromMinutes(keepAlive.Value);
    })

Similarly, if you switch from Kestrel to HTTP.sys (which cannot be reverse proxied from IIS), you can handle some of the queuing and max connections options directly. HTTP.sys has all the same settings you would normally see on an application pool in IIS. This wasn’t going to work for us: we already had other production applications on the server running in IIS and didn’t want to deal with spinning up something separate.

All the above was enough for us to successfully launch but we still noticed that the site would cut requests off from time to time under high usage. This made sense, of course, because we were capping the number of concurrent connections. The team (developers and QA) was extremely nervous the site wouldn’t be able to handle what we anticipated to be a very busy sale.

Adding the Queuing/Throttling

It was time to pull the trigger. I had previously bookmarked Tomasz’s blog post, and after another round of Google searches and StackOverflow dives I decided to give his experimental code a try. I spun up a new branch and started plugging it in. Ultimately it was a comment on his blog post by Ron Langham that prompted me to bite the bullet and go for it.

I am wondering if this will help with the problem I am having, or if you are familiar with the options in the .net core 2.0 kestrel that would help me. We are trying to migrate from .net 4.5 web api running on windows and iis to .net core 2.0/linux/kestrel. Problem is that when I do load testing with 4000 concurrent constant connections on my new server, it just craps out it I have some async database access that may take 200-300ms or so per request. On the Windows/IIS implementation it runs great, the client requests all get processed and not 503/etc errors. On the AWS Linux implementation it seems to overwhelm the instance, the client eventually starts getting 503s and AWS terminates the instance due to the server can’t respond to the health checks.

I am thinking it may have to do with a queue limit that IIS provides similar to maybe what you provide here. That the IIS will throttle the requests and not let too many acutally hit the backend code all at the same time. Does Kestrel provide this option in .net core 2.0?

Ron Langham

I got everything in place and onto our test servers, then spent hours fine-tuning the limit and queue for our application. Along the way I identified the need for path exclusions and tweaked web.config back and forth trying to get a “friendly” 503 to render. Throttling the requests was ultimately our solution.

In the end it has been in production now for over a month and running just dandy.